2026 will mark the transition from AI experimentation to pragmatic implementation, with a strong emphasis on return on investment, governance and agentic AI systems. The hype bubble has deflated, replaced by hard-nosed business requirements and measurable outcomes. CFOs are becoming AI gatekeepers, speculative pilots are being killed, and the discussion is moving to “which AI projects drive profit?” In that context, five strategic shifts matter most for boards and executive teams, and seven conditions will separate winners from the rest.

Shift 1 – From Hype to Hard Work: AI Factories in an ROI-Driven World

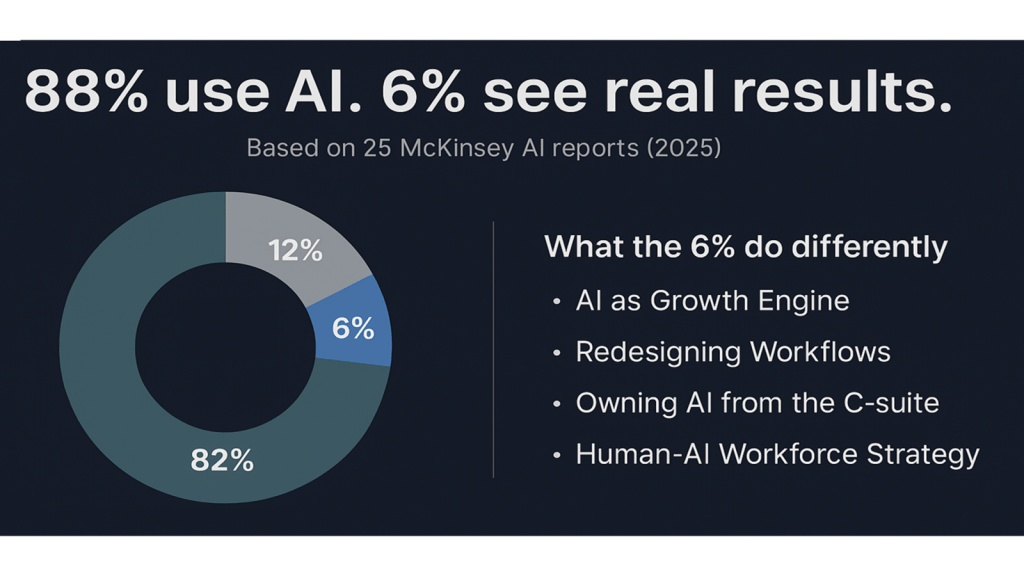

The first shift is financial discipline. Analysts expect enterprises will defer roughly 25% of planned AI spend into 2027 as CFOs insist on clear value, not proof-of-concept experiments. Only a small minority of organisations can currently point to material EBIT impact from AI, despite wide adoption.

The era of “let’s fund ten pilots and see what sticks” is ending. Funding flows to organisations that behave more like AI factories: they standardise how use cases are sourced, evaluated, industrialised and governed, with shared platforms rather than bespoke experiments.

What this means for leadership in 2026

- Every AI initiative needs explicit, P&L-linked metrics (revenue, cost, margin) and a timebox for showing impact.

- Expect your CFO to become a co-owner of the AI portfolio—approving not just spend, but the value logic.

- The key maturity question is shifting from “Do we use AI?” to “How many AI use cases are scaled, reused and governed?”

Shift 2 – AI Teammates in Every Role: Work Gets Re-Architected

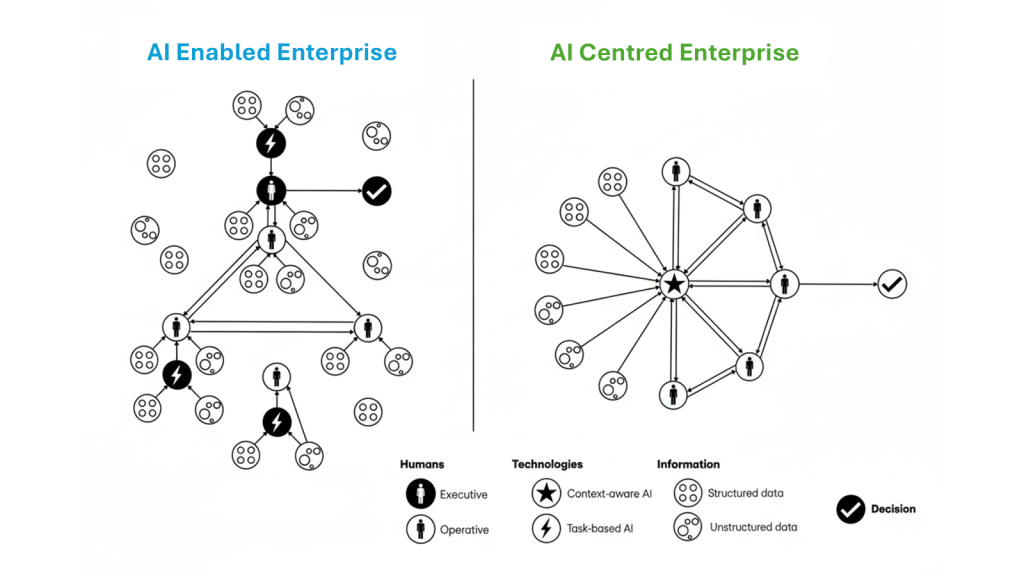

By the end of 2026, around 40% of enterprise applications are expected to embed task-specific AI agents, and a similar share of roles will involve working with those agents. These are not just chatbots; they are digital colleagues handling end-to-end workflows in sales, service, finance, HR and operations.

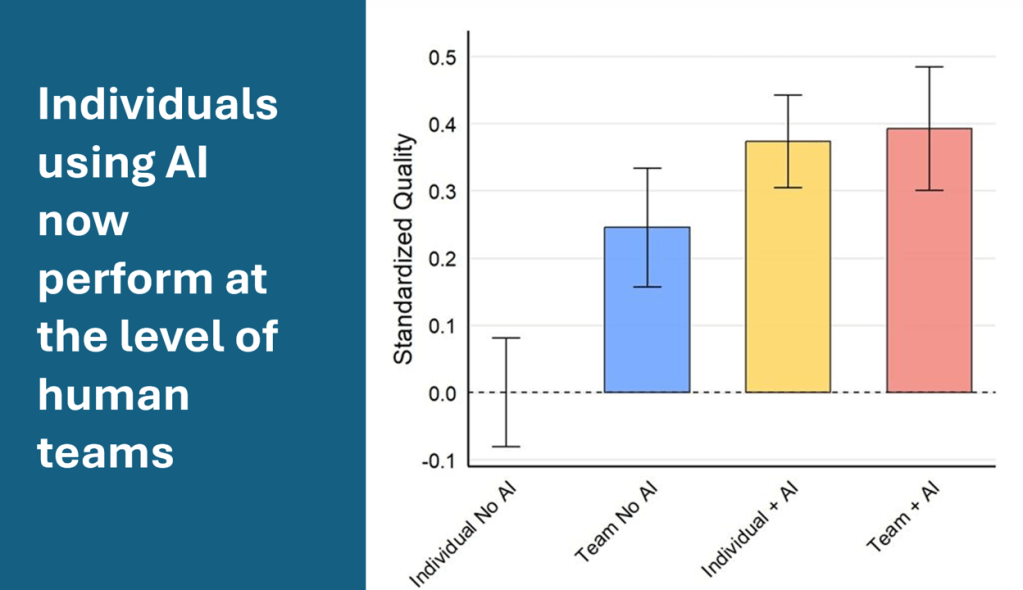

Research from McKinsey and BCG suggests a simple rule of thumb: successful AI transformations are roughly 10% algorithms, 20% technology and data, and 70% people and processes. High performers are about three times more likely than other organisations to fundamentally redesign workflows rather than simply automate existing ones.

What this means for leadership in 2026

- Ask less “Which copilot can we roll out?” and more “What would this process look like if we assumed agents from day one?”

- Measure success in cycle time, error rates and processes eliminated, not just productivity per FTE.

- Treat “working effectively with agents” as a core competency for managers and professionals.

Shift 3 – New Org Structures: CAIOs, AI CoEs and Agent Ops

As AI moves into the core of the business, organisational design is following. A small but growing share of large companies now appoint a dedicated AI leader (CAIO or equivalent), accountable for turning AI strategy into business outcomes and for managing risk.

The workforce pyramid is shifting as well. Entry-level positions are “quietly disappearing”, not through layoffs but through non-renewal, while AI-skilled workers command wage premiums of more than 50% in some markets, and those premiums are still rising.

This drives three structural moves:

- AI Centres of Excellence evolve from advisory teams into delivery engines that provide reference architectures, reusable agents and enablement.

- “Agent ops” capabilities emerge—teams tasked with monitoring, tuning and governing fleets of agents across the enterprise.

- Career paths split between traditional functional tracks and “AI orchestrator” tracks.

What this means for leadership in 2026

- Clarify who owns AI at ExCo level—and whether they have the mandate to say no as well as yes.

- Ensure your AI CoE is set up to ship and scale, not just write guidelines.

- Start redesigning roles, spans of control and career paths on the assumption that agents will take over a significant share of routine work.

Shift 4 – Governance and Risk: From Optional to Existential

By the end of 2026, AI governance will be tested in courtrooms and regulators’ offices, not only in internal committees. Analysts expect thousands of AI-related legal claims globally, with organisations facing lawsuits, fines and in some cases leadership changes due to inadequate governance.

At the same time, frameworks like the EU AI Act are moving into enforcement, particularly in high-risk domains such as healthcare, finance, HR and public services. In parallel, many organisations are introducing “AI-free” assessments to counter concerns about over-reliance on AI and the erosion of critical thinking.

What this means for leadership in 2026

- Treat AI as a formal risk class alongside cyber and financial risk, with explicit classifications, controls and reporting.

- Expect to demonstrate traceability, explainability and human oversight for consequential use cases.

- Recognise that governance failures can quickly become CEO- and board-level issues, not just CIO problems.

Shift 5 – The Data Quality Bottleneck

The fifth shift is about the constraint that matters most: data quality. Multiple sources identify “AI-ready data” as the primary bottleneck. Companies that neglect it could see productivity losses of 15% or more, and many of their AI initiatives are likely to miss ROI targets because of poor data foundations.

Most companies have data. Few have AI-ready data: unified, well-governed, timely, with clear definitions and ownership.

On the infrastructure side, expect a shift from “cloud-first” to “cloud where appropriate,” with organisations seeking more control over cost, jurisdiction and resilience. On the environmental side, data-centre power consumption is becoming a visible topic in ESG discussions, forcing hard choices about which workloads truly deserve the energy and capital they consume.

What this means for leadership in 2026

- Treat critical data domains as products with clear owners and SLAs, not as exhaust from processes and applications.

- Make data readiness a gating criterion for funding AI use cases.

- Treat infrastructure and model choices as strategic bets, not just IT sourcing decisions.

Seven Conditions for Successful AI Implementation in 2026

Pulling these shifts together, here are seven conditions that separate winners from the rest:

FINANCIAL FOUNDATIONS

1. Financial discipline first

- Tie every AI initiative to specific P&L metrics and realistic value assumptions.

- Kill or re-scope projects that cannot demonstrate credible impact within 12–18 months.

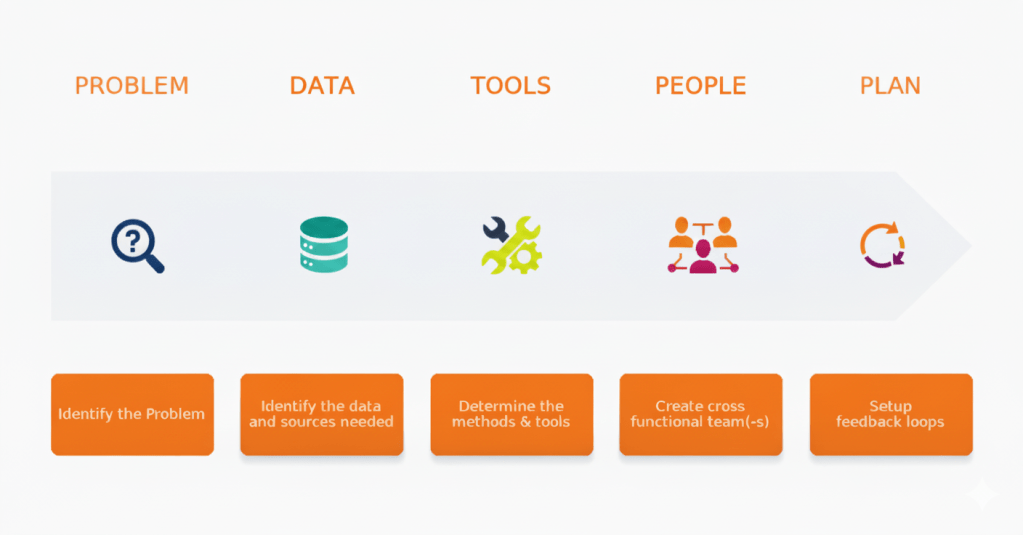

2. Build an AI factory

- Standardise how you source, prioritise and industrialise use cases.

- Focus on a small number of high-value domains and build shared platforms and solution libraries instead of one-off solutions.

OPERATIONAL EXCELLENCE

3. Redesign workflows around agents (the 10–20–70 rule)

- Assume that only 10% of success is the model and 20% is tech/data; the remaining 70% is people and process.

- Measure progress in terms of processes simplified or eliminated, not just tasks automated.

4. Treat data as a product

- Invest in “AI-ready data”: unified, well-governed, timely, with clear definitions and ownership.

- Make data readiness a gating criterion for funding AI use cases.

5. Governance by design, not retrofit

- Mandate governance from day one: model inventories, risk classification, human-in-the-loop for high-impact decisions.

- Build transparency, explainability and audit trails into systems upfront.

ORGANISATIONAL CAPABILITY

6. Organise for AI: leadership, CoEs and agent operations

- Clarify executive ownership (CAIO or equivalent), empower an AI CoE to execute, and stand up agent-ops capabilities to monitor and steer your digital workforce.

7. Commit to continuous upskilling

- Assume roughly 44% of current skills will materially change over the next five years; treat AI literacy and orchestration skills as mandatory.

- Invest more in upskilling existing talent than in recruiting “unicorns.”

The Bottom Line

The defining question for 2026 is no longer “Should we adopt AI?” but “How do we create measurable value from AI while managing its risks?”

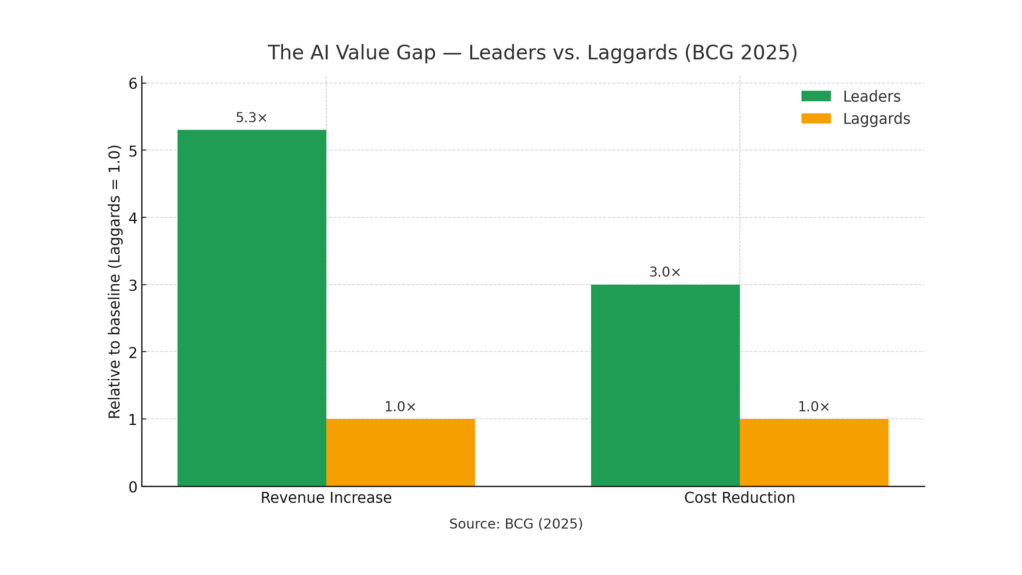

The performance gap is widening fast: companies that redesign workflows are pulling ahead of those merely automating existing processes by a factor of three to five. By 2027, this gap will be extremely hard to close.

Boards and executive teams that answer this question through focused implementation, genuine workflow redesign, responsible governance and continuous workforce development will set the pace for the rest of the decade. Those that continue to treat AI as experimentation will find themselves competing against organisations operating at multiples of their productivity, a deficit that will be very hard to recover from.