Rush shipments are not a logistics problem. They are a warehouse planning problem that logistics pays for. The pattern is predictable: the warehouse plan breaks, and the organization compensates with speed in the form of premium carriers, split shipments, overtime, and last-minute routing. People become heroes for covering mistakes. Over time, rush shipments become the default recovery mechanism: structural waste disguised as operational excellence.

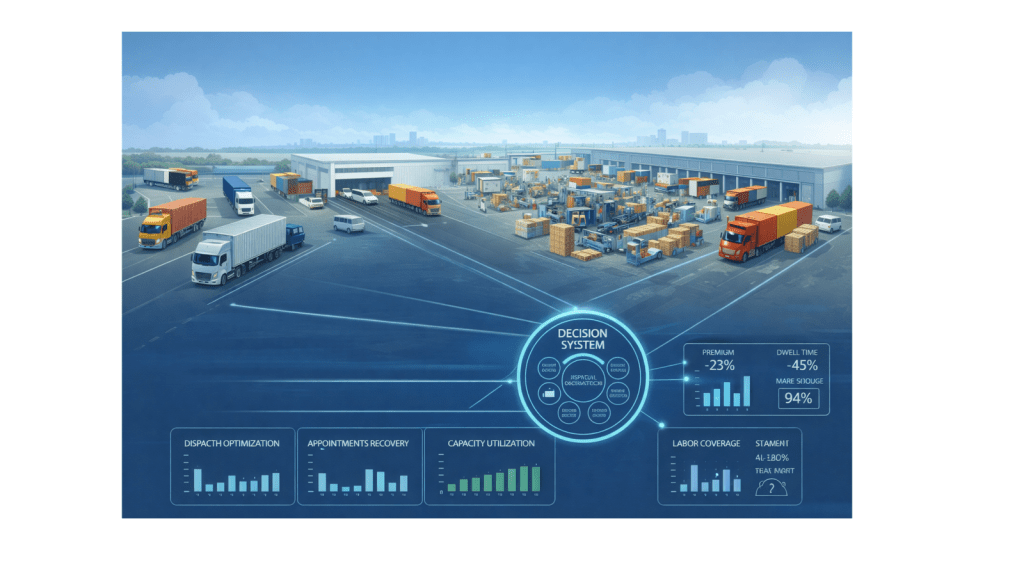

That’s why the road/warehouse logistics digital twin matters. Not only because it finds a better route, but because it prevents urgency from becoming structural. It synchronizes transport, appointments, dock capacity, labor availability, and execution priorities around the same operational truth, so you plan for flow first, and only then use speed when it truly pays back.

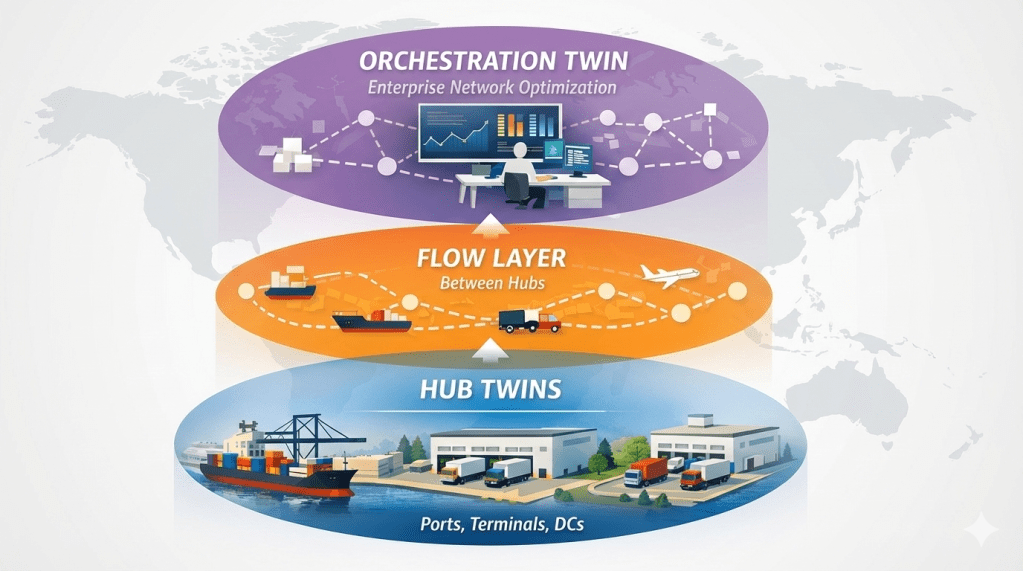

(Note: Part 3 of my Digital Twin series is about the micro shocks that hit the loading dock every hour and drain margin. Part 2 dealt with macro shocks and ship–port synchronization.)

The prize: cost-to-serve discipline, fewer margin shocks

1) Fewer premium moves and tighter cost-to-serve control.

On land, variability becomes cost leakage fast. The twin reduces premium transport and “recovery spend” by preventing the avoidable failures upstream: dock gridlock, wave collapse, and labor mismatch. In many networks, a meaningful share of premium freight is reactive recovery: moves that wouldn’t have been needed if the planned flow had held together.

2) Reliable promises without over-serving everyone.

The twin makes service levels real. Instead of trying to rescue every order with the same urgency, you protect the critical shipments and re-promise early for the rest, improving trust while reducing expensive heroics.

3) Labor volatility becomes manageable, not chaotic.

In many networks, labor availability and skills mix are the binding constraint. A twin treats labor as an explicit planning input, so the day’s plan is realistic before execution begins.

Four issues where the Digital Twin can help you!

1. The rush-shipment spiral

A small delay inside the warehouse cascades into premium spend outside it. The chain reaction is predictable: inbound arrives late >> waves slip >> outbound cutoffs come under threat >> operations split loads, upgrade carriers, or dispatch partials >> costs spike and service is still fragile.

A twin breaks this spiral by making trade-offs explicit early. It identifies which orders to protect, which to re-promise, where consolidation still works, and when a premium move is justified.
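To make “explicit early” concrete, here is a minimal Python sketch of the first step: working out how much buffer each order has left before its outbound cutoff once an inbound delay is known. The field names, the 30-minute buffer, and the sample numbers are illustrative assumptions, not taken from any real system.

```python
from dataclasses import dataclass

@dataclass
class Order:
    order_id: str
    inbound_eta_min: int  # minutes after shift start when the stock arrives
    process_min: int      # receive + pick + pack + load time, in minutes
    cutoff_min: int       # outbound cutoff, in minutes after shift start

def cutoff_slack(order, inbound_delay_min=0):
    """Minutes of buffer left before the cutoff (negative means a miss)."""
    ready_at = order.inbound_eta_min + inbound_delay_min + order.process_min
    return order.cutoff_min - ready_at

def at_risk(orders, inbound_delay_min, min_buffer_min=30):
    """Orders whose slack falls below the buffer, most urgent first."""
    slacks = [(cutoff_slack(o, inbound_delay_min), o.order_id) for o in orders]
    return sorted((s, oid) for s, oid in slacks if s < min_buffer_min)

# Hypothetical orders for one shift.
orders = [
    Order("A", inbound_eta_min=60, process_min=120, cutoff_min=300),
    Order("B", inbound_eta_min=90, process_min=150, cutoff_min=270),
    Order("C", inbound_eta_min=30, process_min=90, cutoff_min=420),
]

print(at_risk(orders, inbound_delay_min=0))
print(at_risk(orders, inbound_delay_min=45))
```

With no delay, nothing is flagged. With a 45-minute inbound delay, order B is already 15 minutes past its cutoff, and that is the moment to decide whether to protect it, re-promise it, or pay for speed.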

2. Waiting for dock availability

Trucks wait because docks are full, paperwork isn’t ready, labor is short, or the yard can’t sequence efficiently. These costs are fragmented—carriers charge, sites absorb, customers complain—so they often remain invisible at enterprise level.

A twin reduces detention by synchronizing three truths: what is arriving, what capacity is actually available, and what should be prioritized. It rebalances appointments as reality changes so arrivals match real readiness.
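As a rough illustration of rebalancing appointments against real readiness, the sketch below assigns trucks to dock slots in priority order and pushes lower-priority arrivals to the next slot that actually has a staffed dock. The greedy rule, the tuple layout, and the numbers are my assumptions for illustration; a real twin would use richer logic.

```python
def rebalance(trucks, slot_capacity):
    """Assign trucks to dock slots, most critical first.

    trucks: list of (truck_id, eta_slot, priority); a lower priority
            number means more critical (assumed convention).
    slot_capacity: docks actually staffed in each time slot.
    Returns {truck_id: assigned_slot}, with None if no slot is left.
    """
    remaining = list(slot_capacity)
    plan = {}
    for truck_id, eta_slot, _priority in sorted(trucks, key=lambda t: (t[2], t[1])):
        # Earliest slot at or after the ETA that still has a free dock.
        slot = next(
            (s for s in range(eta_slot, len(remaining)) if remaining[s] > 0),
            None,
        )
        if slot is not None:
            remaining[slot] -= 1
        plan[truck_id] = slot
    return plan

# Hypothetical morning: three trucks want slot 0, but only one dock is staffed.
trucks = [("T1", 0, 2), ("T2", 0, 1), ("T3", 0, 3), ("T4", 1, 1)]
capacity = [1, 2, 1]
print(rebalance(trucks, capacity))
```

Here the critical truck T2 keeps slot 0, while T1 and T3 are given later appointments instead of queueing in the yard. Rerunning the function whenever ETAs or staffed docks change is the simplest version of keeping arrivals matched to readiness.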

3. The labor mismatch cascade

Many sites have capacity until they don’t, because labor coverage and skills mix fluctuate. A 10–15% shortfall in scarce roles can destroy throughput far more than the same shortfall elsewhere. Discovering this late leads to overtime, shortcuts, quality issues, and rework, and often triggers premium transport to protect cutoffs.

A twin treats labor fill rate and skills coverage as first-class constraints. It reshapes waves, priorities, and dock sequencing early, instead of discovering during the day that the plan was never feasible. The result is less overtime volatility and fewer last-minute rescues.
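A simple way to see why scarce roles matter so much is a bottleneck check: the shift can only process as many units as its tightest role allows. The sketch below is a deliberately simplified model, and the role names, rates, and hours are assumptions chosen for illustration.

```python
def feasible_units(hours_by_role, units_per_hour):
    """Max units the shift can process; the tightest role sets the ceiling."""
    return min(hours_by_role[role] * units_per_hour[role] for role in units_per_hour)

# Hypothetical rates: units each role can handle per labor hour.
units_per_hour = {"picker": 50, "forklift": 200}

planned = {"picker": 120.0, "forklift": 25.0}         # full coverage
pickers_short = {"picker": 102.0, "forklift": 25.0}   # pickers 15% short
forklift_short = {"picker": 120.0, "forklift": 21.25} # forklift drivers 15% short

print(feasible_units(planned, units_per_hour))
print(feasible_units(pickers_short, units_per_hour))
print(feasible_units(forklift_short, units_per_hour))
```

In this example the plan supports 5,000 units. A 15% shortfall in pickers changes nothing because forklift drivers were already the ceiling, while the same 15% shortfall in forklift drivers cuts the day to 4,250 units. Running this check at the start of shift is what tells you whether the wave plan was ever feasible.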

4. The inventory/flow-path trap

This is where cost-to-serve stops being a spreadsheet exercise. You consolidate inventory at a regional DC to reduce handling costs. It works until a demand spike forces cross-country expediting because the stock is now 1,200 miles away. Or inbound gets sent to put-away instead of cross-dock because “we have space,” but demand materializes before replenishment runs, triggering split shipments and premium moves.

These are flow-path decisions that create transport liabilities. A twin makes the trade-off explicit in real time: hold vs move, cross-dock vs put-away, split vs consolidate—based on actual margin impact, not yesterday’s flow logic.
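The cross-dock versus put-away choice can be sketched as an expected-cost comparison. Put-away looks cheaper on handling alone, but it carries the risk that demand arrives before replenishment runs and forces an expedite. All cost figures and the probability input below are hypothetical, and the one-line cost model is my simplification.

```python
def flow_path(cross_dock_cost, put_away_cost, p_demand_before_replen, expedite_cost):
    """Pick the cheaper path once expedite risk is priced into put-away.

    p_demand_before_replen: assumed probability that demand shows up
    before the put-away stock has been replenished to a pick location.
    Returns (choice, expected cost of the put-away path).
    """
    expected_put_away = put_away_cost + p_demand_before_replen * expedite_cost
    choice = "cross-dock" if cross_dock_cost < expected_put_away else "put-away"
    return choice, expected_put_away

# Quiet week: almost no chance of demand before replenishment.
print(flow_path(cross_dock_cost=40, put_away_cost=25,
                p_demand_before_replen=0.0, expedite_cost=100))

# Promotion week: a one-in-four chance of an expedite changes the answer.
print(flow_path(cross_dock_cost=40, put_away_cost=25,
                p_demand_before_replen=0.25, expedite_cost=100))
```

The point of the sketch is that “we have space” is not a cost argument. Once the transport liability is priced in, the same pallet can belong on a different path from one week to the next.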

Example: Monday morning peak

A promotion week starts with volume above plan and labor fill rate 15% short. Without a twin, appointments stay static while ETAs shift, congestion builds in the yard and at the docks, and the wave plan runs “as designed” until it collapses under backlog. Outbound cutoffs turn red, operations split loads and activate premium carriers, overtime spikes, and service still becomes fragile. The cost spike is then rationalized as “the cost of peaks.”

With a twin, the day starts differently. The labor shortfall is treated as the binding constraint at the start of shift, appointments are rebalanced to smooth peaks and protect critical inbound and outbound flows, and dock sequencing is reshaped around true cutoff risk rather than yesterday’s plan. Waves and labor priorities are adjusted early and some orders are re-promised explicitly, so premium moves are targeted and justified and overtime becomes deliberate rather than chaotic. The outcome isn’t “no disruption.” It’s fewer premium moves, less overtime volatility, and a controlled service impact instead of a margin surprise.

So what does it take to make this real?

What it takes

Three things separate this from spreadsheet planning:

(1) Decision-grade data/insights on labor coverage, dock state, and appointment flow, not just transport ETAs.

(2) Decision logic that is fast enough to replan before chaos locks in.

(3) Clear authority on who can adjust appointments, waves, and promises when constraints change.

KPIs: 3 north stars (and a small supporting set)

1) Premium freight rate — % of shipments and % of spend that is premium/expedited.

2) Cost-to-serve variance by segment — which customers/products/orders are unprofitable once recovery effort is included.

3) Labor productivity under volatility — throughput per labor hour during peaks, plus overtime volatility.

Supporting diagnostics: detention/dwell, missed cutoffs, and plan adherence under stress (how often you stayed in controlled flow vs reverted to heroics).
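The first KPI is worth computing both ways, because the share of shipments and the share of spend can tell very different stories. A minimal sketch, assuming each shipment is recorded as a cost plus a premium flag (a hypothetical data layout):

```python
def premium_freight_rate(shipments):
    """Return (premium share of shipment count, premium share of spend).

    shipments: list of (cost, is_premium) tuples.
    """
    total_spend = sum(cost for cost, _ in shipments)
    if not shipments or total_spend == 0:
        return 0.0, 0.0
    premium_count = sum(1 for _, is_premium in shipments if is_premium)
    premium_spend = sum(cost for cost, is_premium in shipments if is_premium)
    return premium_count / len(shipments), premium_spend / total_spend

# Hypothetical week: one expedited load among four shipments.
week = [(100, False), (100, False), (100, False), (300, True)]
count_share, spend_share = premium_freight_rate(week)
print(f"{count_share:.0%} of shipments, {spend_share:.0%} of spend")
```

In this made-up week, premium moves are a quarter of the shipments but half of the freight spend, which is why the spend view usually gets attention faster than the count view.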

How to implement without boiling the ocean

Start at one site where premium spend, detention, and service shortfalls are already visible and measurable. This creates a clear baseline and fast credibility. Then make the key operational signals decision-grade: labor coverage and skills mix, appointment flow, dock state, backlog, and cutoff risk. Next, define simple rules that make trade-offs explicit, especially when to re-promise versus when to expedite, tied to service tier and margin. From there, close the loop into the daily operating cadence by connecting those rules to wave replanning, dock sequencing, and appointment adjustments as reality changes. Finally, export the commitments you can now trust into the enterprise layer (which I will address in Part 4 of my series), so network orchestration is built on real constraints rather than assumed averages.
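A “simple rule” for re-promise versus expedite can be very short. The sketch below is one possible policy, not a recommendation: the tier names, the choice to always protect critical shipments, and the margin test are assumptions you would replace with your own.

```python
def recovery_action(service_tier, order_margin, premium_cost, slack_min):
    """Decide how to recover an order, tied to service tier and margin.

    slack_min: minutes of buffer before the cutoff (negative means the
    order will miss it on the current plan).
    """
    if slack_min >= 0:
        return "hold plan"    # still on track, no recovery needed
    if service_tier == "critical":
        return "expedite"     # assumed policy: critical orders are protected
    if order_margin > premium_cost:
        return "expedite"     # speed still pays back on this order
    return "re-promise"       # tell the customer early instead

# Hypothetical orders, all 20 minutes behind except the last one.
print(recovery_action("critical", order_margin=50, premium_cost=200, slack_min=-20))
print(recovery_action("standard", order_margin=500, premium_cost=200, slack_min=-20))
print(recovery_action("standard", order_margin=80, premium_cost=200, slack_min=-20))
print(recovery_action("standard", order_margin=80, premium_cost=200, slack_min=15))
```

The value is less in the logic than in writing it down: once the rule is explicit, every premium move is either justified by it or visibly an exception.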

The questions executives should ask

- What percentage of our premium freight spend is planned vs reactive?

- Which shipments are profitable on paper but unprofitable after recovery cost?

- Do we re-promise early by policy or do we “save it” with premium transport by habit?

- Are labor planning and operational planning aligned or still separate?

- Do our incentives reward hitting service at any cost, or hitting service and margin?