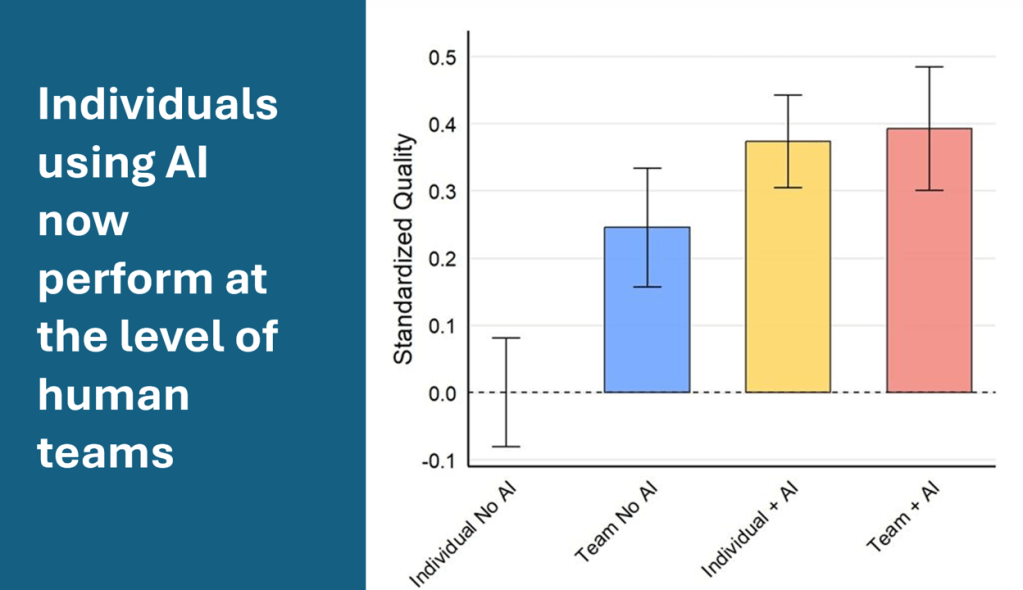

The implications of AI are profound: when individuals can deliver team-level output with AI, organisations must rethink not just productivity, but the very design of work and teams. A recent Harvard Business School and Wharton field experiment titled “The Cybernetic Teammate” offers one of the clearest demonstrations of this shift. Conducted with 776 professionals at Procter & Gamble, the study compared individuals and teams working on real product-innovation challenges, both with and without access to generative AI.

The results were striking:

- Individuals using AI performed at least as well as human teams without AI.

- Teams using AI performed best of all.

- AI also balanced out disciplinary biases—commercial and technical professionals produced more integrated, higher-quality outputs when assisted by AI.

In short, AI amplified human capability at both the individual and collective level. It became a multiplier of creativity, insight, and balance—reshaping the traditional boundaries of teamwork and expertise.

The Evidence Is Converging

Other large-scale studies reinforce this picture. A Harvard–BCG experiment showed that, for tasks within the model’s “competence frontier,” consultants using GPT-4 completed 12% more tasks, worked 25% faster, and delivered work rated 40% higher in quality.

How Work Will Be Done Differently

These findings signal a fundamental redesign in how work is organised. The dominant model—teams collaborating to produce output—is evolving toward individual-with-AI first, followed by team integration and validation.

A typical workflow may now look like this:

AI-assisted ideation → human synthesis → AI refinement → human decision.

Work becomes more iterative, asynchronous, and cognitively distributed. Human collaboration increasingly occurs through the medium of AI: teams co-create ideas, share prompt libraries, and build upon each other’s AI-generated drafts.

The BCG study introduces a useful distinction:

- Inside the AI frontier: tasks within the model’s competence—ideation, synthesis, summarisation—where AI can take the lead.

- Outside the AI frontier: tasks requiring novel reasoning, complex judgment, or proprietary context—where human expertise must anchor the process.

Future roles will be defined less by function and more by how individuals navigate that frontier: knowing when to rely on AI and when to override it. Skills like critical reasoning, verification, and synthesis will matter more than rote expertise.

Implications for Large Enterprises

For established organisations, the shift toward AI-augmented work changes the anatomy of structure, leadership, and learning.

1. Flatter, more empowered structures.

AI copilots widen managerial spans by automating coordination and reporting. However, they also increase the need for judgment-based oversight, which calls for managers who coach, review, and integrate rather than micromanage.

2. Redefined middle-management roles.

The traditional coordinator role gives way to integrator and quality gatekeeper. Managers become stewards of method and culture rather than traffic controllers.

3. Governance at the “AI frontier.”

Leaders must define clear rules of engagement: which tasks can be automated, which require human review, and which data or models are approved. This “model–method–human” control system ensures both productivity and trust.

4. A new learning agenda.

Reskilling moves from technical training to cognitive fluency: prompting, evaluating, interpreting, and combining AI insights with business judgment. The AI-literate professional becomes the new organisational backbone.

5. Quality and performance metrics evolve.

Volume and throughput give way to quality, cycle time, rework reduction, and bias detection—metrics aligned with the new blend of human and machine contribution.

In short, AI doesn’t remove management—it redefines it around sense-making, coaching, and cultural cohesion.
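For teams that want to make the rules of engagement from point 3 concrete, one option is to write them down as a small, reviewable policy table. The sketch below is illustrative only: the task types, the model name `internal-llm`, and the `check` helper are all hypothetical, and a real policy would come out of the organisation's own governance process.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Handling(Enum):
    AUTOMATE = "automate"          # AI output may be used directly
    HUMAN_REVIEW = "human_review"  # AI drafts, a person signs off
    HUMAN_ONLY = "human_only"      # no AI involvement


@dataclass(frozen=True)
class Rule:
    task_type: str
    handling: Handling
    approved_models: Tuple[str, ...] = ()


# Hypothetical entries for illustration; not taken from any real policy.
POLICY = {
    rule.task_type: rule
    for rule in (
        Rule("summarisation", Handling.AUTOMATE, ("internal-llm",)),
        Rule("ideation", Handling.HUMAN_REVIEW, ("internal-llm",)),
        Rule("pricing_decision", Handling.HUMAN_ONLY),
    )
}


def check(task_type: str, model: Optional[str] = None) -> Handling:
    """Return how a task should be handled.

    Unknown task types and unapproved models fall back to human-only,
    so the safe path is the default.
    """
    rule = POLICY.get(task_type)
    if rule is None:
        return Handling.HUMAN_ONLY
    if rule.handling is Handling.HUMAN_ONLY:
        return Handling.HUMAN_ONLY
    if model not in rule.approved_models:
        return Handling.HUMAN_ONLY
    return rule.handling
```

The design choice worth noting is the default: anything not explicitly listed is treated as human-only, so the policy has to be extended deliberately rather than by omission.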

Implications for Startups and Scale-Ups

While enterprises grapple with governance and reskilling, startups are already operating in an AI-native way.

Evidence from recent natural experiments shows that AI-enabled startups raise funding faster and with leaner teams. The cost of experimentation drops, enabling more rapid iteration but also more intense competition.

The typical AI-native startup now runs with a small human core and an AI-agent ecosystem handling customer support, QA, and documentation. The operating model is flatter, more fluid, and relentlessly data-driven.

Yet the advantage is not automatic. As entry barriers fall, differentiation depends on execution, brand, and customer intimacy. Startups that harness AI for learning loops—testing, improving, and scaling through real-time feedback—will dominate the next wave of digital industries.

Leadership Imperatives – Building AI-Enabled Work Systems

For leaders, the challenge is no longer whether to use AI, but how to design work and culture around it. Five imperatives stand out:

- Redesign workflows, not just add tools. Map where AI fits within existing processes and where human oversight is non-negotiable.

- Build the complements. Create shared prompt libraries, custom GPTs, structured review protocols, and access to verified data.

- Run controlled pilots. Test AI augmentation in defined workstreams, measure speed, quality, and engagement, and scale what works.

- Empower and educate. Treat AI literacy as a strategic skill—every employee a prompt engineer, every manager a sense-maker.

- Lead the culture shift. Encourage experimentation, transparency, and open dialogue about human-machine collaboration.

Closing Thought

AI will not replace humans or teams. But it will transform how humans and teams create value together.

The future belongs to organisations that treat AI not as an external technology, but as an integral part of their work design and learning system. The next generation of high-performing enterprises—large and small—will be those that master this new choreography between human judgment and machine capability.

AI won’t replace teams—but teams that know how to work with AI will outperform those that don’t.

More on this in one of my next newsletters.