Enterprises around the world are racing to deploy generative AI. Yet most remain stuck in the pilot trap: experimenting with copilots and narrow use cases while legacy operating models, data silos, and governance structures stay intact. The results are incremental: efficiency gains without strategic reinvention.

With context-aware AI developing rapidly, we can also chart a different course: making AI not an add-on, but the center of how the enterprise thinks, decides, and operates. This shift, captured powerfully in The AI-Centered Enterprise (ACE) by Ram Bala, Natarajan Balasubramanian, and Amit Joshi (IMD), signals the next evolution in business design: from AI-enabled to AI-centered.

The premise is bold. Instead of humans using AI tools to perform discrete tasks, the enterprise itself becomes an intelligent system, continuously sensing context, understanding intent, and orchestrating action through networks of people and AI agents. This is the next-generation operating model for the age of context-aware intelligence, and it will separate tomorrow’s leaders from those merely experimenting today.

What an AI-Centered Enterprise Is

At its core, an AI-centered enterprise is built around Context-Aware AI (CAI), systems that understand not only content (what is being said) but also intent (why it is being said). These systems operate across three layers:

- Interaction layer: where humans and AI collaborate through natural conversation, document exchange, or digital workflow (ACE).

- Execution layer: where tasks and processes are performed by autonomous or semi-autonomous agents.

- Governance layer: where policies, accountability, and ethical guardrails are embedded into the AI fabric.

The book introduces the idea of the “unshackled enterprise” — one no longer bound by rigid hierarchies and manual coordination. Instead, work flows dynamically through AI-mediated interactions that connect needs with capabilities across the organization. The result is a company that can learn, decide, and act at digital speed — not by scaling headcount, but by scaling intelligence.

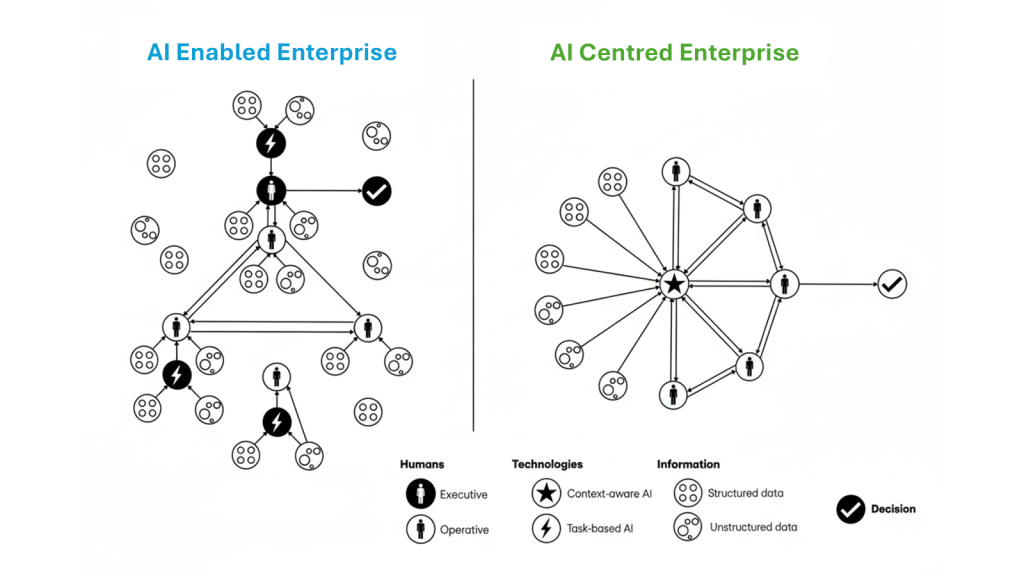

This is a profound departure from current “AI-enabled” organizations, which mostly deploy AI as assistants within traditional structures. In an AI-centered enterprise, AI becomes the organizing principle, the invisible infrastructure that drives how value is created, decisions are made, and work is executed.

How It Differs from Today’s Experiments

Today’s enterprise AI landscape is dominated by point pilots and embedded copilots: productivity boosters bolted onto existing processes. They enhance efficiency but rarely transform the logic of value creation.

An AI-centered enterprise, by contrast, rebuilds the transaction system of the organization around intelligence. Key differences include:

- From tools to infrastructure: AI doesn’t merely automate isolated tasks; it coordinates entire workflows, from matching expertise to demand, to ensuring compliance, to optimizing outcomes.

- From structured data to unstructured cognition: Traditional analytics rely on structured databases. AI-centered systems start with unstructured information (emails, documents, chats), extracting relationships and meaning through knowledge graphs and retrieval-augmented reasoning.

- From pilots to internal marketplaces: Instead of predefined processes, AI mediates dynamic marketplaces where supply and demand for skills, resources, and data meet in real time, guided by the enterprise’s goals and policies.

The result is a shift from human-managed bureaucracy to AI-coordinated agility. Decision speed increases, friction falls, and collaboration scales naturally across boundaries.
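
The internal-marketplace idea above can be made concrete with a small sketch. It is an illustration only, not the mechanism described in the book: the `Provider` and `Request` types, the `cleared` policy flag, and the greedy skill-overlap scoring are all hypothetical choices made here for brevity.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    skills: frozenset
    capacity: int          # how many more requests this provider can take
    cleared: bool = True   # hypothetical policy flag (e.g. compliance clearance)


@dataclass
class Request:
    title: str
    needs: frozenset
    requires_clearance: bool = False


def score(request, provider):
    """Fraction of the requested skills that the provider covers."""
    if not request.needs:
        return 0.0
    return len(request.needs & provider.skills) / len(request.needs)


def match(requests, providers):
    """Greedily assign each request to the best eligible provider."""
    assignments = {}
    for req in requests:
        # Policy and capacity act as hard filters before any scoring happens.
        eligible = [
            p for p in providers
            if p.capacity > 0 and (p.cleared or not req.requires_clearance)
        ]
        best = max(eligible, key=lambda p: score(req, p), default=None)
        if best is None or score(req, best) == 0:
            assignments[req.title] = None  # no suitable supply right now
            continue
        best.capacity -= 1
        assignments[req.title] = best.name
    return assignments


providers = [
    Provider("pricing-team", frozenset({"pricing", "analytics"}), capacity=1),
    Provider("contract-agent", frozenset({"legal", "contracts"}), capacity=2),
    Provider("data-intern", frozenset({"analytics"}), capacity=1, cleared=False),
]
requests = [
    Request("Review supplier contract", frozenset({"legal", "contracts"}), True),
    Request("Churn analysis", frozenset({"analytics"})),
    Request("Sensitive pricing study", frozenset({"pricing", "analytics"}), True),
]
result = match(requests, providers)
```

In this toy run the third request goes unmatched, because the only cleared provider with pricing skills was already used for the churn analysis. That is a limitation of greedy, first-come matching; a real marketplace would presumably weigh priorities and optimize across all open requests.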

What It Takes: The Capability and Governance Stack

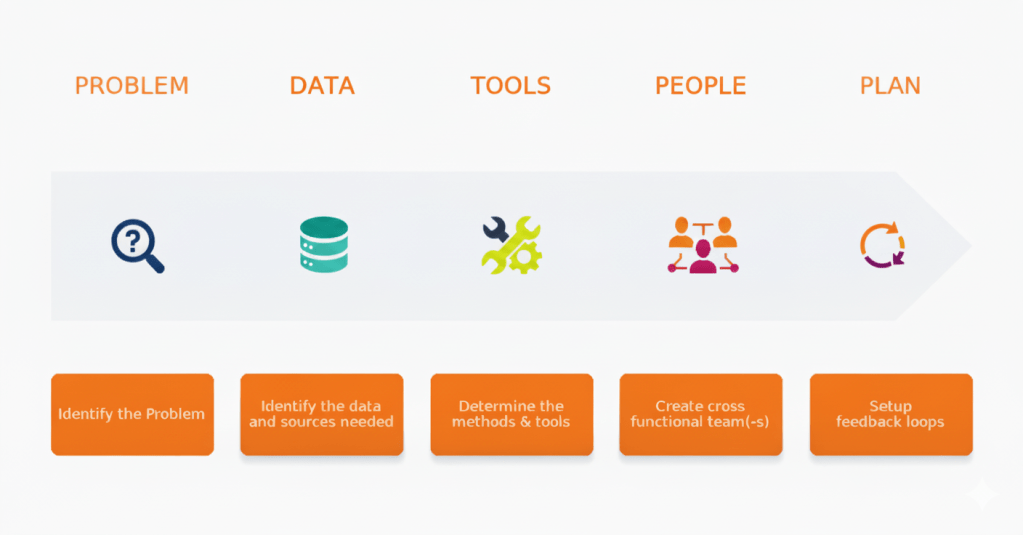

The authors of The AI-Centered Enterprise propose a pragmatic framework for this transformation, the 3Cs: Calibrate, Clarify, and Channelize.

- Calibrate – Understand the types of AI your business requires. What decisions depend on structured vs. unstructured data? What precision or control is needed? This step ensures technology choices fit business context.

- Clarify – Map your value creation network: where do decisions happen, and how could context-aware intelligence change them? This phase surfaces where AI can augment, automate, or orchestrate work for tangible impact.

- Channelize – Move from experimentation to scaled execution. Build a repeatable path for deployment, governance, and continuous improvement. Focus on high-readiness, high-impact areas first to build credibility and momentum.

Underneath the 3Cs lies a capability stack that blends data engineering, knowledge representation, model orchestration, and responsible governance.

- Context capture: unify data, documents, and interactions into a living knowledge graph.

- Agentic orchestration: deploy systems of task, dialogue, and decision agents that coordinate across domains.

- Policy and observability: embed transparency, traceability, and human oversight into every layer.
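
A minimal sketch of how these three capabilities might fit together is shown below. Everything in it is an assumption made for illustration: the in-memory triple store standing in for a knowledge graph, the `policy_allows` rule with its refund limit of 500, and the `audit_log` list standing in for an observability layer. None of it comes from the book.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Tiny in-memory triple store: (subject, relation, object)."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self._edges[(subject, relation)].add(obj)

    def query(self, subject, relation):
        # .get avoids creating empty entries for unknown keys.
        return sorted(self._edges.get((subject, relation), set()))


audit_log = []  # stand-in for an observability / traceability store


def policy_allows(action, amount):
    """Hypothetical guardrail: refunds above 500 need a human decision."""
    return not (action == "refund" and amount > 500)


def run_agent(graph, customer, action, amount):
    """Look up context, check policy, then act or escalate, logging either way."""
    context = graph.query(customer, "has_contract")
    decision = "executed" if policy_allows(action, amount) else "escalated_to_human"
    audit_log.append({
        "customer": customer,
        "action": action,
        "amount": amount,
        "context": context,
        "decision": decision,
    })
    return decision


# Context capture: facts extracted from documents and interactions.
graph = KnowledgeGraph()
graph.add("acme", "has_contract", "premium-support")
graph.add("acme", "raised_ticket", "late-delivery")

small = run_agent(graph, "acme", "refund", 120)
large = run_agent(graph, "acme", "refund", 2000)
```

The design point is that the policy check and the audit record sit inside the agent's execution path rather than being added afterwards, so every action, whether executed or escalated, leaves a trace alongside the context it was based on.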

Organizationally, the AI-centered journey requires anchored agility — a balance between central guardrails (architecture, ethics, security) and federated innovation (business-owned use cases). As with digital transformations before it, success depends as much on leadership and learning as on technology.

Comparative Perspectives — and Where the Field Is Heading

The ideas in The AI-Centered Enterprise align with a broader shift seen across leading research and consulting work: a convergence toward AI as the enterprise operating system.

McKinsey: The Rise of the Agentic Organization

McKinsey describes the next evolution as the agentic enterprise: organizations where humans work alongside fleets of intelligent agents embedded throughout workflows. Early adopters are already redesigning decision rights, funding models, and incentives to harness this new form of distributed intelligence.

Their State of AI 2025 shows that firms capturing the most value have moved beyond pilots to process rewiring and AI governance, embedding AI directly into operations, not as a service layer.

BCG: From Pilots to “Future-Built” Firms

BCG’s September 2025 research finds that only about 5% of companies currently realize sustainable AI value at scale. Those that do are “future-built,” treating AI as a capability, not a project. These leaders productize internal platforms, reuse components across business lines, and dedicate investment to AI agents, which BCG estimates already generate 17% of enterprise AI value, projected to reach nearly 30% by 2028.

This mirrors the book’s view of context-aware intelligence and marketplaces as the next sources of competitive advantage.

Harvard Business Review: Strategy and Human-AI Collaboration

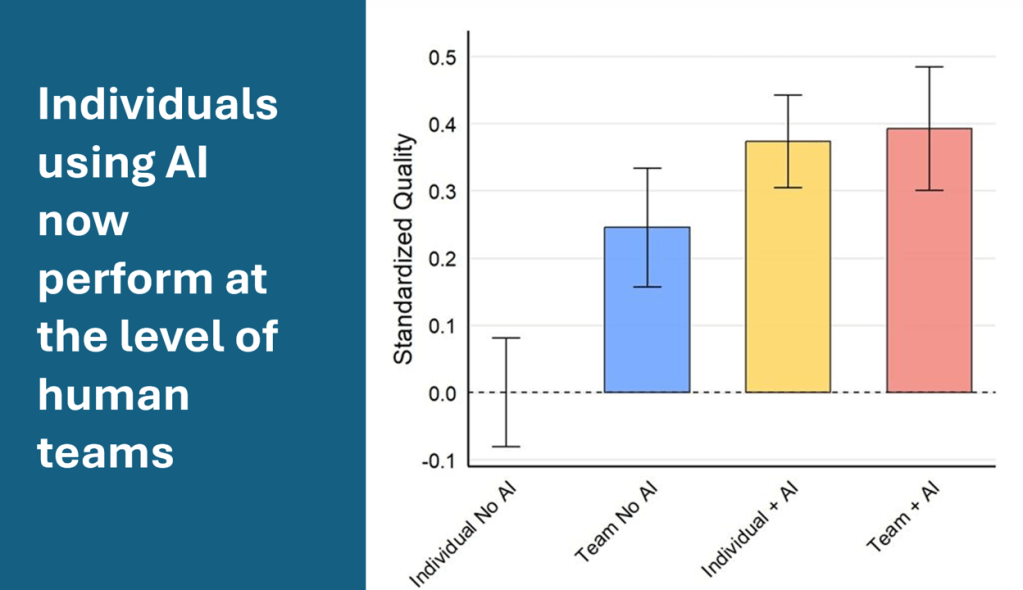

HBR provides the strategic frame. In Competing in the Age of AI, Iansiti and Lakhani show how AI removes the traditional constraints of scale, scope, and learning, allowing organizations to grow exponentially without structural drag. Wilson and Daugherty’s Collaborative Intelligence adds the human dimension, redefining roles so that humans shift from operators to orchestrators of intelligent systems.

Convergence – A New Operating System for the Enterprise

Across these perspectives, the trajectory is clear:

- AI is moving from a set of tools to the coordination system of the enterprise.

- Work will increasingly flow through context-aware agents that understand intent and execute autonomously.

- Leadership attention is shifting from proof-of-concept to operating-model redesign: governance, role architecture, and capability building.

- The competitive gap will widen between firms that use AI to automate tasks and those that rebuild the logic of their enterprise around intelligence.

In short, the AI-centered enterprise is not a future vision — it is the direction of travel for every organization serious about reinvention in the next five years.

The AI-Centered Enterprise – A Refined Summary

The AI-Centered Enterprise (Bala, Balasubramanian & Joshi, 2025) offers one of the clearest playbooks yet for this new organizational architecture. The authors begin by defining the limitations of today’s AI adoption: fragmented pilots, a reliance on structured data, and an overreliance on human intermediaries to bridge data, systems, and decisions.

They introduce Context-Aware AI (CAI) as the breakthrough: AI that understands not just information but the intent and context behind it, enabling meaning to flow seamlessly across functions. CAI underpins an “unshackled enterprise,” where collaboration, decision-making, and execution happen fluidly across digital boundaries.

The book outlines three core principles:

- Perceive context: Use knowledge graphs and natural language understanding to derive meaning from unstructured information — the true foundation of enterprise knowledge.

- Act with intent: Deploy AI agents that can interpret business objectives, not just execute instructions.

- Continuously calibrate: Maintain a human-in-the-loop approach to governance, ensuring AI decisions stay aligned with strategy and ethics.

Implementation follows the 3C framework — Calibrate, Clarify, Channelize — enabling leaders to progress from experimentation to embedded capability.

The authors conclude that the real frontier of AI is not smarter tools but smarter enterprises: organizations designed to sense, reason, and act as coherent systems of intelligence.

Closing Reflection

For executives navigating transformation, The AI-Centered Enterprise reframes the challenge. The question is no longer how to deploy AI efficiently, but how to redesign the enterprise so intelligence becomes its organizing logic.

Those who start now, building context-aware foundations, adopting agentic operating models, and redefining how humans and machines collaborate, will not just harness AI. They will become AI-centered enterprises: adaptive, scalable, and truly intelligent by design.