In September 2025, I received two diplomas: IMD’s AI Strategy & Implementation and Nyenrode University’s Corporate Governance for Supervisory Boards. I am proud of both; more importantly, they cap a period in which I deliberately rebuilt how I learn.

With AI accelerating change and putting top-tier knowledge at everyone’s fingertips, the edge goes to leaders who learn—and apply—faster than the market moves. In this issue I am not writing theory; I am sharing my learning journey of the past six months—what I did, what worked, and the routine I will keep using. If you are a leader, I hope this helps you design a learning system that fits a busy executive life.

My Learning System – 3 pillars

1) Structured learning

This helped me to gain the required depth:

- IMD: AI Strategy & Implementation. I connected strategy to execution: where AI creates value across the business, and how to move from pilots to scaled outcomes. In upcoming newsletters, I will share insights on specific topics we covered in depth in this course.

- Nyenrode: Corporate Governance for Supervisory Boards. I deepened my view on board-level oversight: roles and duties, risk/compliance, performance monitoring, and strategic oversight. I wrote my final paper on how to close the digital gap in supervisory boards (see also my earlier article).

- Google/Kaggle’s 5-day Generative AI Intensive. Hands-on labs demystified how large language models work: what is under the hood, why prompt quality matters, where workflows can break, and how to evaluate outputs against business goals. It gave me a better understanding of how to use these models more effectively.

2) Curated sources

This extended the breadth of my understanding of the use of AI.

2a. Books

Below are a few examples; you can find more book summaries and reviews on my website: www.bestofdigitaltransformation.com/digital-ai-insights.

- Co-Intelligence: a pragmatic mindset for working with AI—experiment, reflect, iterate.

- Human + Machine: how to redesign processes around human–AI teaming rather than bolt AI onto old workflows.

- The AI-Savvy Leader: what executives need to know to steer outcomes without needing to code.

2b. Research & articles

I built a personal information base with research from HBR, MIT, IMD, and Gartner, plus selected pieces from McKinsey, BCG, Strategy&, Deloitte, and EY. This keeps me grounded in capability shifts, operating-model implications, and the evolving landscape.

2c. Podcasts & newsletters

Two that stuck: AI Daily Brief and Everyday AI. Short, practical audio overviews with companion newsletters so I can find and revisit sources. They give me a quick daily pulse without drowning in feeds.

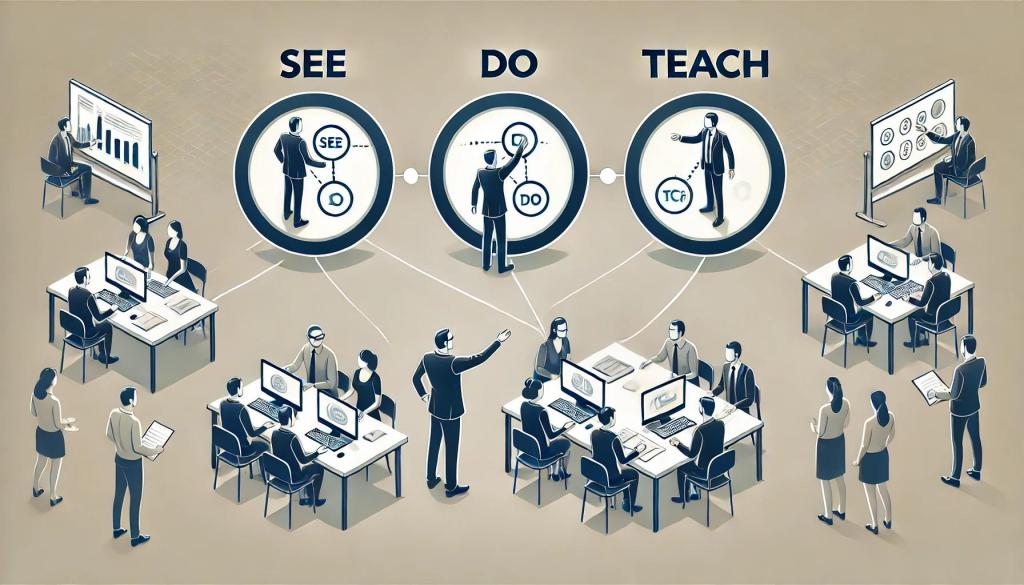

3) AI as my tutor

I am using AI to get personalised learning support.

3a. Explain concepts. I use AI to clarify ideas, contrast approaches, and test solutions using examples from my context.

3b. Create learning plans. I ask for step-by-step learning journeys with milestones and practice tasks tailored to current projects.

3c. Drive my understanding. I use different models to create learning content, provide assignments, and quiz me on my understanding.

How my journey unfolded

Here is how it played out.

1) Started experimenting with ChatGPT.

I was not an early adopter; I joined when GPT-4 was already strong. Like many, I did not fully trust it at first. I began with simple questions and asked the model to show how it interpreted my prompts. That built confidence without creating risk or frustration.

2) Built foundations with books.

I read books like Co-Intelligence, Human + Machine, and The AI-Savvy Leader. These created a shared understanding of where AI helps (and does not), how to pair humans and machines, and how to organise for impact. I wrote a review of each book to anchor what I learned and shared the reviews on my website.

3) Added research and articles.

I set up a repository with research across HBR/MIT/IMD/Gartner and selected consulting research. This kept me anchored in evidence and applications, and helped me track the operational implications for strategy, data, and governance.

4) Tried additional models (Gemini and Claude).

Rather than picking a “winner,” I used them side by side on real tasks. The value was in contrast—seeing how different models frame the same question, then improving the final answer by combining perspectives. Letting models critique each other surfaced blind spots.

5) Went deep with Google + Kaggle.

The 5-day intensive course clarified what is under the hood: tokens/vectors, why prompts behave the way they do, where workflows tend to break, and how to evaluate outputs beyond “sounds plausible.” The exercises translated directly into better prompt design and gave me a first understanding of how agents work.

6) Used NotebookLM for focused learning.

For my Nyenrode paper, I uploaded the key articles and interacted only with that corpus. NotebookLM generated grounded summaries, surfaced insights I might have missed, and reduced the risk of invented citations (by sticking to the uploaded resources). The auto-generated “podcast” is one of the coolest features I have experienced, and it really helps me absorb the content.

7) Added daily podcasts/newsletters to stay current.

The news volume on AI is impossible to track end-to-end. AI Daily Brief and Everyday AI give me a quick scan each morning and links worth saving for later deep dives. This makes the difference between staying aware and constantly feeling behind.

8) Learned new tools and patterns at IMD.

- DeepSeek helped me debug complex requests by showing how the reasoning model interpreted my prompt, a fantastic way to unravel complex problems.

- Agentic models like Manus showed the next step: chaining actions and tools to complete tasks end-to-end.

- Custom GPTs (within today’s LLMs) let me encode my context, tone, and recurring workflows, boosting consistency and speed across repeated tasks.

Bring it together with a realistic cadence.

Leaders do not need another to-do list; they need a routine that works. Here is the rhythm I am using now:

Daily

- Skim one high-signal newsletter or listen to a podcast.

- Capture questions to explore later.

- Learn by doing with the various tools.

Weekly

- Learn: read one or more papers/articles on various AI-related topics.

- Apply: use one idea on a live problem; interact with AI to go deeper.

- Share: create my weekly newsletter, based on my learnings.

Monthly

- Pick one learning topic and read several primary sources, not just summaries.

- Draft an experiment with goal, scope, success metric, risks, and data needs, using AI to pressure-test assumptions.

- Review with thought leaders or colleagues for challenge and alignment.

Quarterly

- Read at least one book that expands my mental models.

- Create a summary for my network. Teaching others cements my own understanding.

(Semi-)Annually

- Add a structured program or certificate to go deep and to benefit from peer debate.

Closing

The AI era compresses the shelf life of knowledge. Waiting for a single course is no longer enough. What works is a learning system: structured learning for depth, curated sources for breadth, and AI as your tutor for speed. That has been my last six months, and it is a routine I will continue.